Designing and Testing PyZMQ Applications – Part 1


ZeroMQ (or ØMQ or ZMQ) is an intelligent messaging framework, often described as “sockets on steroids”. That is, its sockets look like normal TCP sockets but actually work as you’d expect sockets to work. PyZMQ adds even more convenience to them, which makes it a really good choice if you want to implement a distributed application. Another big plus for ØMQ is that you can integrate sub-systems written in C, Java or any other language ØMQ supports (and there are a lot of them).

If you’ve never heard of ØMQ before, I recommend reading ZeroMQ an Introduction by Nicholas Piël before you go on with this article.

The ØMQ Guide and PyZMQ’s documentation are really good, so you can easily get started. However, when we began to implement a larger application with it (a distributed simulation framework), several questions arose which were not covered by the documentation:

- What’s the best way to design our application?

- How can we keep it readable, flexible and maintainable?

- How do we test it?

I couldn’t find a best-practice article that answered my questions. So in this series of articles, I’m going to talk about what I’ve learned over the last few months. I’m not a PyZMQ expert (yet ;-)), but what I’ve done so far works quite well, and I’ve never had more tests in a project than I have now.

You’ll find the source for the examples at bitbucket. They are written in Python 3.2 and tested under Mac OS X Lion, Ubuntu 11.10 and Windows 7, 64 bit in each case. If you have any suggestions or improvements, please fork me or just leave a comment.

In this first article, I’m going to talk a bit about how you could generally design your application to be flexible, maintainable and testable. The second part will be about unit testing, and finally, I’ll cover process and system testing.

Comparison of Different Approaches

There are basically three ways to implement a PyZMQ application: one that’s easy but of limited practical use, one that’s more flexible but not really pythonic, and one that needs a bit more setup but is both flexible and pythonic.

All three examples feature a simple ping process and a pong process with varying complexity. I use multiprocessing to run the pong process, because that’s what you should usually do in real PyZMQ applications (you don’t want to use threads and if both processes are running on the same machine, there’s no need to invoke both of them separately).

All of the examples will have the following output:

(zmq)$ python blocking_recv.py
Pong got request: ping 0
Ping got reply: pong 0
...
Pong got request: ping 4
Ping got reply: pong 4

Let’s start with the easy one first. You just use one of the socket’s recv methods in a loop:

# blocking_recv.py
import multiprocessing

import zmq


addr = 'tcp://127.0.0.1:5678'


def ping():
    """Sends ping requests and waits for replies."""
    context = zmq.Context()
    sock = context.socket(zmq.REQ)
    sock.bind(addr)

    for i in range(5):
        sock.send_unicode('ping %s' % i)
        rep = sock.recv_unicode()  # This blocks until we get something
        print('Ping got reply:', rep)


def pong():
    """Waits for ping requests and replies with a pong."""
    context = zmq.Context()
    sock = context.socket(zmq.REP)
    sock.connect(addr)

    for i in range(5):
        req = sock.recv_unicode()  # This also blocks
        print('Pong got request:', req)
        sock.send_unicode('pong %s' % i)


if __name__ == '__main__':
    pong_proc = multiprocessing.Process(target=pong)
    pong_proc.start()

    ping()

    pong_proc.join()

So this is very easy and not that much code. The problem is that it only works well if your process uses just one socket. Unfortunately, in larger applications that is rarely the case.

A way to handle multiple sockets per process is polling. In addition to your context and socket(s), you need a poller. You also have to tell it which events on which socket you are going to poll:

# polling.py
def pong():
    """Waits for ping requests and replies with a pong."""
    context = zmq.Context()
    sock = context.socket(zmq.REP)
    sock.bind(addr)

    # Create a poller and register the events we want to poll
    poller = zmq.Poller()
    poller.register(sock, zmq.POLLIN|zmq.POLLOUT)

    for i in range(10):
        # Get all sockets that can do something
        socks = dict(poller.poll())

        # Check if we can receive something
        if sock in socks and socks[sock] == zmq.POLLIN:
            req = sock.recv_unicode()
            print('Pong got request:', req)

        # Check if we can send something
        if sock in socks and socks[sock] == zmq.POLLOUT:
            sock.send_unicode('pong %s' % (i // 2))

    poller.unregister(sock)

You see that our pong function got pretty ugly. You need 10 iterations to do five ping-pongs, because in each iteration you can either receive or send. And each socket you add to your process adds two more if-statements. You could improve that design by creating a base class that wraps the polling loop, and then just registering sockets and callbacks in an inheriting class.
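The base class idea just mentioned could look roughly like the following. This is only a sketch under assumptions: PollingLoop, register and run_once are hypothetical names that do not exist in PyZMQ, and the demo uses two in-process PAIR sockets instead of the REQ/REP pair from the examples so that it can run in a single process.

```python
import zmq


class PollingLoop:
    """Hypothetical helper that maps sockets to callbacks and hides polling."""

    def __init__(self):
        self.poller = zmq.Poller()
        self.callbacks = {}

    def register(self, sock, callback):
        """Arrange for callback(sock) to run when sock has a message waiting."""
        self.poller.register(sock, zmq.POLLIN)
        self.callbacks[sock] = callback

    def run_once(self, timeout=1000):
        """Poll once (timeout in milliseconds) and dispatch readable sockets."""
        for sock, event in self.poller.poll(timeout):
            if event & zmq.POLLIN:
                self.callbacks[sock](sock)


# Demo with two in-process PAIR sockets (an assumption made for brevity)
context = zmq.Context()
a = context.socket(zmq.PAIR)
a.bind('inproc://demo')
b = context.socket(zmq.PAIR)
b.connect('inproc://demo')

received = []
loop = PollingLoop()
loop.register(b, lambda sock: received.append(sock.recv()))

a.send(b'ping 0')
loop.run_once()
print(received)

a.close()
b.close()
context.term()
```

Adding another socket now means one more register() call instead of two more if-statements, which is essentially what the event loop in the next example gives you out of the box.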

That brings us to our final example. PyZMQ comes with an adapted Tornado event loop that handles the polling and works with ZMQStreams, which wrap sockets and add some functionality:

# eventloop.py
from zmq.eventloop import ioloop, zmqstream


class Pong(multiprocessing.Process):
    """Waits for ping requests and replies with a pong."""
    def __init__(self):
        super().__init__()
        self.loop = None
        self.stream = None
        self.i = 0

    def run(self):
        """
        Initializes the event loop, creates the sockets/streams and
        starts the (blocking) loop.

        """
        context = zmq.Context()
        self.loop = ioloop.IOLoop.instance()  # This is the event loop

        sock = context.socket(zmq.REP)
        sock.bind(addr)

        # We need to create a stream from our socket and
        # register a callback for recv events.
        self.stream = zmqstream.ZMQStream(sock, self.loop)
        self.stream.on_recv(self.handle_ping)

        # Start the loop. It runs until we stop it.
        self.loop.start()

    def handle_ping(self, msg):
        """Handles ping requests and sends back a pong."""
        # msg is a list of bytes objects
        req = msg[0].decode()
        print('Pong got request:', req)
        self.stream.send_unicode('pong %s' % self.i)

        # We’ll stop the loop after 5 pings
        self.i += 1
        if self.i == 5:
            self.stream.flush()
            self.loop.stop()

This adds even more boilerplate code, but it will pay off if you use more sockets, and most of that stuff in run() can be put into a base class. Another drawback is that the IOLoop only uses recv_multipart(), so you always get a list of byte strings which you have to decode or deserialize on your own. However, you can use all the send methods the socket offers (like send_unicode() or send_json()). You can also stop the loop from within a message handler.
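Since an on_recv callback always receives a list of byte strings, it can help to have one small helper that does the decoding. The following is a minimal sketch that assumes the payload is UTF-8 encoded JSON in the last frame; decode_frames and its json_index parameter are hypothetical names, not part of PyZMQ.

```python
import json


def decode_frames(frames, json_index=-1):
    """Return a copy of *frames* with the frame at *json_index* JSON-decoded.

    All other frames (for example routing identities) stay raw bytes.
    """
    decoded = list(frames)
    decoded[json_index] = json.loads(frames[json_index].decode('utf-8'))
    return decoded


# What an on_recv callback might receive for a JSON-encoded ping
frames = [b'["ping", 3]']
print(decode_frames(frames))  # [['ping', 3]]
```

Keeping this in one place means the individual handlers never have to touch raw bytes.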

In the next sections, I’ll discuss how you could implement a PyZMQ process that uses the event loop.

Communication Design

Before you start to implement anything, you should think about what kind of processes you need in your application and which messages they exchange. You should also decide what kind of message format and serialization you want to use.

PyZMQ has built-in support for Unicode (send sends plain C strings which map to Python byte objects, so there’s a separate method to send Unicode strings), JSON and Pickle.

JSON is nice, because it’s fast and lets you integrate processes written in other languages into your application. It’s also a bit safer, because you cannot receive arbitrary objects as with pickle. The most straightforward syntax for JSON messages is to let them be triples [msg_type, args, kwargs], where msg_type maps to a method name and args and kwargs get passed as positional and keyword arguments.
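To make the triple format concrete, here is a small sketch that uses only the standard library. The names make_message, dispatch and Ponger are made up for illustration; a real receiver should also refuse method names it does not want to expose.

```python
import json


def make_message(msg_type, *args, **kwargs):
    """Serialize a [msg_type, args, kwargs] triple to UTF-8 JSON bytes."""
    return json.dumps([msg_type, list(args), kwargs]).encode('utf-8')


def dispatch(target, raw):
    """Decode a triple and call the method of *target* named by msg_type."""
    msg_type, args, kwargs = json.loads(raw.decode('utf-8'))
    return getattr(target, msg_type)(*args, **kwargs)


class Ponger:
    """Hypothetical receiver with one method per message type."""

    def ping(self, count, prefix='pong'):
        return '%s %s' % (prefix, count)


raw = make_message('ping', 2, prefix='PONG')
print(dispatch(Ponger(), raw))  # PONG 2
```

The sender and the receiver only have to agree on the method names and their arguments, which is what makes this format easy to use from other languages.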

I strongly recommend that you document each chain of messages your application sends to perform a certain task. I do this with fancy PowerPoint graphics and with even fancier ASCII art in Sphinx. Here is how I would document our ping-pong:

Sending pings
-------------

* If the ping process sends a *ping*, the pong process responds with a
  *pong*.

* The number of pings (and pongs) is counted. The current ping count is
  sent with each message.

::

    PingProc      PongProc
     [REQ] ---1--> [REP]
           <--2---

    1 IN : ['ping, count']
    1 OUT: ['ping, count']

    2 IN : ['pong, count']
    2 OUT: ['pong, count']

First, I write some bullet points that explain how the processes behave and why they behave this way. This is followed by some kind of sequence diagram that shows when which process sends which message using which socket type. Finally, I write down what the messages look like. # IN is what you would pass to send_multipart and # OUT is what is received on the other side by recv_multipart. If one of the participating sockets is a ROUTER or DEALER, IN and OUT will differ (though that’s not the case in this example). Everything in single quotation marks (') represents a JSON serialized list.

If our pong process used a ROUTER socket instead of the REP socket, it would look like this:

1 IN : ['ping, count']
1 OUT: [ping_uuid, '', 'ping, count']

2 IN : [ping_uuid, '', 'pong, count']
2 OUT: ['pong, count']
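The extra frames in the ROUTER case are the sender’s identity and an empty delimiter frame. A handler for such a socket has to separate them from the payload and put them back in front of the reply. A minimal sketch with plain lists of bytes (split_envelope and wrap_reply are hypothetical helper names):

```python
def split_envelope(frames):
    """Split ROUTER-style frames into (identities, payload).

    Everything before the first empty frame is routing information;
    everything after it is the actual message.
    """
    delimiter = frames.index(b'')
    return frames[:delimiter], frames[delimiter + 1:]


def wrap_reply(identities, payload):
    """Rebuild the frame list a ROUTER socket needs to route a reply."""
    return identities + [b''] + payload


# "1 OUT" from the listing above, as the pong process would receive it
idents, payload = split_envelope([b'ping_uuid', b'', b'["ping", 0]'])

# "2 IN": the reply that is passed to send_multipart
reply = wrap_reply(idents, [b'["pong", 0]'])
print(reply)
```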

This seems like a lot of tedious work, but trust me, it really helps a lot when you need to change something a few weeks later!

Application Design

In the examples above, the Pong process was responsible for setting everything up, for receiving/sending messages and for the actual application logic (counting incoming pings and creating a pong).

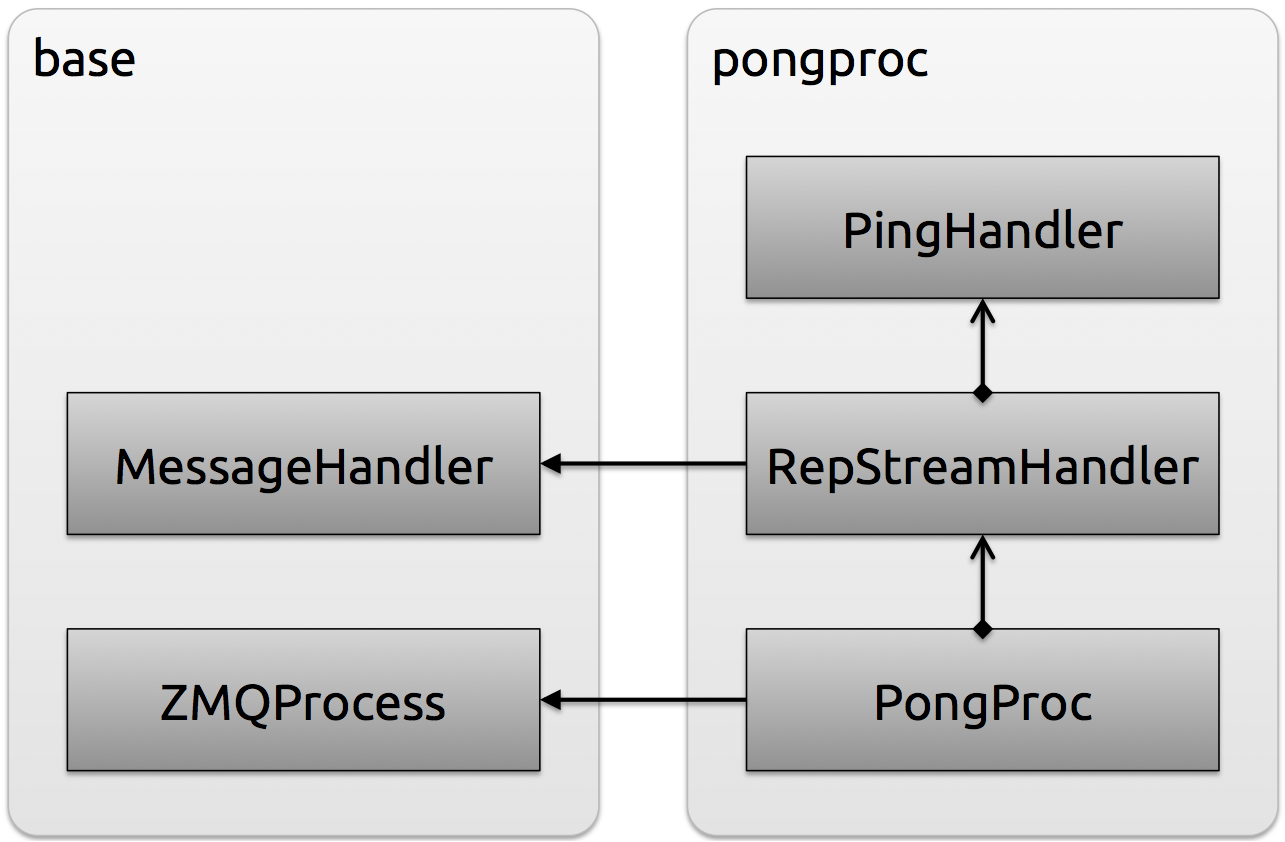

Obviously, this is not a very good design (at least if your application gets more complex than our little ping-pong example). What we can do about this is to put most of that nasty setup stuff into a base class which all your processes can inherit from, separate message handling and (de)serialization from it and finally put all the actual application logic into a separate (PyZMQ-independent) class. This will result in a three-level architecture:

- The lowest tier will contain the entry point of the process, set up everything and start the event loop. A common base class provides utilities for creating sockets/streams and setting everything up.

- The second level is message handling and (de)serialization. A base class performs the (de)serialization and error handling. A message handler inherits this class and implements a method for each message type that should be handled.

- The third level will be the application logic and completely PyZMQ-agnostic.

Base classes should be defined for the first two tiers to reduce redundant code in multiple processes or message handlers. The following figure shows the five classes our process is going to consist of:

ZmqProcess – The Base Class for all Processes

The base class basically implements two things:

- a setup method that creates a context and a loop

- a stream factory method for streams with an on_recv callback. It creates a socket and can connect/bind it to a given address or bind it to a random port (that’s why it returns the port number in addition to the stream itself).

It also inherits multiprocessing.Process so that it is easier to spawn it as a sub-process. Of course, you can also just call its run() method from your main().

# example_app/base.py
import multiprocessing

from zmq.eventloop import ioloop, zmqstream
import zmq


class ZmqProcess(multiprocessing.Process):
    """
    This is the base for all processes and offers utility functions
    for setup and creating new streams.

    """
    def __init__(self):
        super().__init__()

        self.context = None
        """The ØMQ :class:`~zmq.Context` instance."""

        self.loop = None
        """PyZMQ's event loop (:class:`~zmq.eventloop.ioloop.IOLoop`)."""

    def setup(self):
        """
        Creates a :attr:`context` and an event :attr:`loop` for the process.

        """
        self.context = zmq.Context()
        self.loop = ioloop.IOLoop.instance()

    def stream(self, sock_type, addr, bind, callback=None, subscribe=b''):
        """
        Creates a :class:`~zmq.eventloop.zmqstream.ZMQStream`.

        :param sock_type: The ØMQ socket type (e.g. ``zmq.REQ``)
        :param addr: Address to bind or connect to formatted as *host:port*,
                *(host, port)* or *host* (bind to random port).
                If *bind* is ``True``, *host* may be:

                - the wild-card ``*``, meaning all available interfaces,
                - the primary IPv4 address assigned to the interface, in its
                  numeric representation or
                - the interface name as defined by the operating system.

                If *bind* is ``False``, *host* may be:

                - the DNS name of the peer or
                - the IPv4 address of the peer, in its numeric representation.

                If *addr* is just a host name without a port and *bind* is
                ``True``, the socket will be bound to a random port.
        :param bind: Binds to *addr* if ``True`` or tries to connect to it
                otherwise.
        :param callback: A callback for
                :meth:`~zmq.eventloop.zmqstream.ZMQStream.on_recv`, optional
        :param subscribe: Subscription pattern for *SUB* sockets, optional,
                defaults to ``b''``.
        :returns: A tuple containing the stream and the port number.

        """
        sock = self.context.socket(sock_type)

        # addr may be 'host:port' or ('host', port)
        if isinstance(addr, str):
            addr = addr.split(':')
        host, port = addr if len(addr) == 2 else (addr[0], None)

        # Bind/connect the socket
        if bind:
            if port:
                sock.bind('tcp://%s:%s' % (host, port))
            else:
                port = sock.bind_to_random_port('tcp://%s' % host)
        else:
            sock.connect('tcp://%s:%s' % (host, port))

        # Add a default subscription for SUB sockets
        if sock_type == zmq.SUB:
            sock.setsockopt(zmq.SUBSCRIBE, subscribe)

        # Create the stream and add the callback
        stream = zmqstream.ZMQStream(sock, self.loop)
        if callback:
            stream.on_recv(callback)

        return stream, int(port)
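The address handling in stream() is the part that is easiest to get wrong, so it may be worth pulling it out into a function that can be checked on its own. The following sketch mirrors the parsing logic from the listing; parse_addr is a hypothetical helper and not part of the original example_app.

```python
def parse_addr(addr):
    """Return (host, port); port is None if *addr* contains no port.

    *addr* may be 'host:port', 'host', ('host', port) or ('host',).
    """
    if isinstance(addr, str):
        addr = addr.split(':')
    host, port = addr if len(addr) == 2 else (addr[0], None)
    return host, (int(port) if port is not None else None)


print(parse_addr('127.0.0.1:5678'))    # ('127.0.0.1', 5678)
print(parse_addr(('127.0.0.1', 5678)))  # ('127.0.0.1', 5678)
print(parse_addr('127.0.0.1'))          # ('127.0.0.1', None)
```

Note that a missing port only makes sense when binding. In the listing above, connecting without a port would format the address as tcp://host:None, so callers that connect should always pass one.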

PongProc – The Actual Process

The PongProc inherits ZmqProcess and is the main class for our process. It creates the streams, starts the event loop and dispatches all messages to the appropriate handlers:

# example_app/pongproc.py
import zmq

import base


host = '127.0.0.1'
port = 5678


class PongProc(base.ZmqProcess):
    """
    Main process for the Ponger. It handles ping requests and sends back
    a pong.

    """
    def __init__(self, bind_addr):
        super().__init__()

        self.bind_addr = bind_addr
        self.rep_stream = None
        self.ping_handler = PingHandler()

    def setup(self):
        """Sets up PyZMQ and creates all streams."""
        super().setup()

        # Create the stream and add the message handler
        self.rep_stream, _ = self.stream(zmq.REP, self.bind_addr, bind=True)
        self.rep_stream.on_recv(RepStreamHandler(self.rep_stream, self.stop,
                                                 self.ping_handler))

    def run(self):
        """Sets up everything and starts the event loop."""
        self.setup()
        self.loop.start()

    def stop(self):
        """Stops the event loop."""
        self.loop.stop()

If you are going to start this process as a sub-process via start, make sure everything you instantiate in __init__ is pickle-able or it won’t work on Windows. Linux and Mac OS X use fork to create a sub-process; fork just makes a copy of the main process and gives it a new process ID. On Windows, there is no fork, so the context of your main process is pickled and sent to the sub-process.

In setup, call super().setup() before you create a stream or you won’t have a loop instance for them. We call setup from run, because the context must be created within the new system process, which wouldn’t be the case if we called setup from __init__.
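The difference between creating resources in __init__ and creating them in setup can be illustrated without PyZMQ. In the sketch below, a threading.Lock stands in for anything that cannot be pickled; EagerWorker, LazyWorker and state_is_picklable are made-up names, and the check only pickles the instance state rather than spawning a real sub-process.

```python
import pickle
import threading


class EagerWorker:
    """Creates its unpicklable resource in __init__ (problematic)."""

    def __init__(self):
        self.lock = threading.Lock()


class LazyWorker:
    """Stores only plain data in __init__; setup() is meant for the child."""

    def __init__(self, bind_addr):
        self.bind_addr = bind_addr
        self.lock = None

    def setup(self):
        self.lock = threading.Lock()


def state_is_picklable(obj):
    """Return True if the instance attributes of *obj* can be pickled."""
    try:
        pickle.dumps(vars(obj))
        return True
    except (TypeError, pickle.PicklingError):
        return False


print(state_is_picklable(EagerWorker()))                    # False
print(state_is_picklable(LazyWorker(('127.0.0.1', 5678))))  # True
```

PongProc follows the second pattern: __init__ only stores the bind address and plain Python objects, while the context, loop and streams are created later in setup.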

The stop method is not really necessary in this example, but it can be used to send stop messages to sub-processes when the main process terminates and to do other kinds of clean-up. You can also call it if you catch a KeyboardInterrupt after calling run.

MessageHandler – The Base Class for Message Handlers

A PyZMQ message handler can be any callable that accepts one argument—the list of message parts as byte objects. Hence, our MessageHandler class needs to implement __call__:

# example_app/base.py
from zmq.utils import jsonapi as json


class MessageHandler(object):
    """
    Base class for message handlers for a :class:`ZmqProcess`.

    Inheriting classes only need to implement a handler function for each
    message type.

    """
    def __init__(self, json_load=-1):
        self._json_load = json_load

    def __call__(self, msg):
        """
        Gets called when a message is received by the stream this handler is
        registered at. *msg* is a list as returned by
        :meth:`zmq.core.socket.Socket.recv_multipart`.

        """
        # Try to JSON-decode the index "self._json_load" of the message
        i = self._json_load
        msg_type, data = json.loads(msg[i])
        msg[i] = data

        # Get the actual message handler and call it
        if msg_type.startswith('_'):
            raise AttributeError('%s starts with an "_"' % msg_type)

        getattr(self, msg_type)(*msg)

As you can see, it’s quite simple. It just tries to JSON-load the index defined by self._json_load. We defined earlier that the first element of the JSON-encoded message defines the message type (e.g., ping). If an attribute of the same name exists in the inheriting class, it is called with the remainder of the message.

You can also add logging or additional security measures at this point, but that is not necessary for this example.
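As an illustration of what such additional measures could look like, here is a sketch of a stricter variant that logs every message and only dispatches message types from an explicit whitelist. It uses the standard json module instead of zmq.utils.jsonapi so that it runs without PyZMQ; StrictHandler, allowed and Recorder are hypothetical names.

```python
import json
import logging

log = logging.getLogger('example_app')


class StrictHandler:
    """Sketch of a handler base class with an explicit whitelist."""

    allowed = frozenset()  # message types a subclass is willing to handle

    def __call__(self, msg):
        msg_type, data = json.loads(msg[-1].decode('utf-8'))
        if not isinstance(msg_type, str) or msg_type not in self.allowed:
            log.warning('Rejected message type %r', msg_type)
            return False
        log.debug('Dispatching %r', msg_type)
        getattr(self, msg_type)(data)
        return True


class Recorder(StrictHandler):
    """Example subclass that only accepts ping messages."""

    allowed = frozenset({'ping'})

    def __init__(self):
        self.seen = []

    def ping(self, data):
        self.seen.append(data)


handler = Recorder()
print(handler([b'["ping", 1]']))      # True
print(handler([b'["__init__", 1]']))  # False
```

A whitelist is more restrictive than the underscore check in MessageHandler, because public helper methods of the handler cannot be triggered by a message either.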

RepStreamHandler – The Concrete Message Handler

This class inherits the MessageHandler I just showed you and is used in PongProc.setup. It defines a handler method for ping messages and the plzdiekthxbye stop message. In its __init__ it receives references to the rep_stream, PongProc’s stop method and the ping_handler, our actual application logic:

# example_app/pongproc.py
class RepStreamHandler(base.MessageHandler):
    """Handles messages arriving at the PongProc’s REP stream."""
    def __init__(self, rep_stream, stop, ping_handler):
        super().__init__()
        self._rep_stream = rep_stream
        self._stop = stop
        self._ping_handler = ping_handler

    def ping(self, data):
        """Send back a pong."""
        rep = self._ping_handler.make_pong(data)
        self._rep_stream.send_json(rep)

    def plzdiekthxbye(self, data):
        """Just calls :meth:`PongProc.stop`."""
        self._stop()

PingHandler – The Application Logic

The PingHandler contains the actual application logic (which is not much in this example). The make_pong method just gets the number of pings sent with the ping message and creates a new pong message. The serialization happens in the message handling layer (RepStreamHandler calls send_json), so our handler does not depend on PyZMQ:

# example_app/pongproc.py
class PingHandler(object):

    def make_pong(self, num_pings):
        """Creates and returns a pong message."""
        print('Pong got request number %s' % num_pings)

        return ['pong', num_pings]
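Because PingHandler knows nothing about PyZMQ, it can be exercised with a plain function call. The following sketch repeats the class so that it runs on its own; the test function name and the use of contextlib.redirect_stdout to capture the print output are my own choices, not something taken from the example project.

```python
import contextlib
import io


class PingHandler(object):
    """Copy of the class shown above, repeated to keep the sketch runnable."""

    def make_pong(self, num_pings):
        """Creates and returns a pong message."""
        print('Pong got request number %s' % num_pings)
        return ['pong', num_pings]


def test_make_pong():
    """Check the reply and the printed text without any sockets involved."""
    out = io.StringIO()
    with contextlib.redirect_stdout(out):
        rep = PingHandler().make_pong(3)
    assert rep == ['pong', 3]
    assert out.getvalue() == 'Pong got request number 3\n'


test_make_pong()
```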

Summary

Okay, that’s it for now. I showed you three ways to use PyZMQ. If you have a very simple process with only one socket, you can easily use its blocking recv methods. If you need more than one socket, I recommend using the event loop. And polling … you don’t want to use that.

If you decide to use PyZMQ’s event loop, you should separate the application logic from all the PyZMQ stuff (like creating streams, sending/receiving messages and dispatching them). If your application consists of more than one process (which is usually the case), you should also create a base class with shared functionality for them.

In the next part, I’m going to talk about how you can test your application.